Some applications stay responsive no matter how heavy the load, while others slow to a crawl. For applications running on AWS, Auto Scaling is often the reason for the difference. It works like an intelligent assistant that adds or removes resources for your application as the size of the audience changes. Whether the application suddenly gains a hundred new users or hits a quiet period, AWS Auto Scaling helps keep it running smoothly without continuous monitoring or manual server adjustments.

AWS Auto Scaling is a resource management solution that can also reduce costs. It adjusts capacity dynamically while the application is running, matching resources to real-time demand so that the user experience stays consistent.

AWS Auto Scaling is not only about adding and removing resources. It also works together with AWS services such as Elastic Load Balancing, EC2, and ECS, which makes it a complete solution for application scaling in AWS.

How Does Automated Scaling Improve Application Performance?

Automated scaling maintains application performance by making sure enough computing resources are available to meet demand. When traffic increases, the system automatically adds instances to handle the load, which avoids slow response times or even downtime.

Moreover, Auto Scaling integrates with monitoring tools that evaluate performance metrics in near real time. Scaling actions can therefore be based on measured values such as CPU utilization or the number of requests. As a result, the resources allocated follow the actual load, which helps keep performance on AWS consistently high.

Besides that, using Auto Scaling together with Elastic Load Balancing spreads traffic evenly across all active instances. This prevents any one instance from being overloaded and helps the whole system operate efficiently.
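As a rough illustration of what even distribution means, the sketch below hands requests to instances in round-robin order. It is a simplified stand-in, not how Elastic Load Balancing is implemented, and the `distribute` helper and instance names are made up for the example.

```python
from collections import Counter
from itertools import cycle


def distribute(request_ids: list[str], instances: list[str]) -> Counter:
    """Assign requests to instances in round-robin order and count them per instance."""
    assignment = Counter({name: 0 for name in instances})
    # cycle() repeats the instance list; zip() stops when the requests run out.
    for _, instance in zip(request_ids, cycle(instances)):
        assignment[instance] += 1
    return assignment


# Hypothetical example: ten requests spread over three instances.
requests = [f"req-{i}" for i in range(10)]
counts = distribute(requests, ["i-a", "i-b", "i-c"])
print(dict(counts))
```

No instance ends up with more than one request above any other, which is the property that keeps a single instance from being overloaded.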

How Does Automated Scaling Contribute To Cost Optimisation?

Automated scaling is a cost-effective solution because it adjusts resources to match actual demand, which does away with overprovisioning. Instead of running at peak capacity all the time, applications scale up automatically during peak hours and scale down when demand drops.
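To make the overprovisioning point concrete, here is a small worked comparison. The demand profile and the per-instance capacity are invented for illustration, and real workloads will differ.

```python
import math

# Hypothetical demand in requests per second for each hour of one day (24 values).
HOURLY_RPS = [40] * 8 + [200] * 4 + [400] * 4 + [200] * 4 + [40] * 4

# Assumption: one instance comfortably serves 50 requests per second.
RPS_PER_INSTANCE = 50


def instances_needed(rps: int) -> int:
    """Smallest number of instances that can serve the load (never fewer than 1)."""
    return max(1, math.ceil(rps / RPS_PER_INSTANCE))


def fixed_instance_hours(demand: list[int]) -> int:
    """Instance-hours when capacity is sized for the daily peak around the clock."""
    return instances_needed(max(demand)) * len(demand)


def scaled_instance_hours(demand: list[int]) -> int:
    """Instance-hours when capacity follows demand hour by hour."""
    return sum(instances_needed(rps) for rps in demand)


print(fixed_instance_hours(HOURLY_RPS), scaled_instance_hours(HOURLY_RPS))
```

With this made-up profile, capacity sized for the peak uses 192 instance-hours per day, while capacity that follows demand uses 76.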

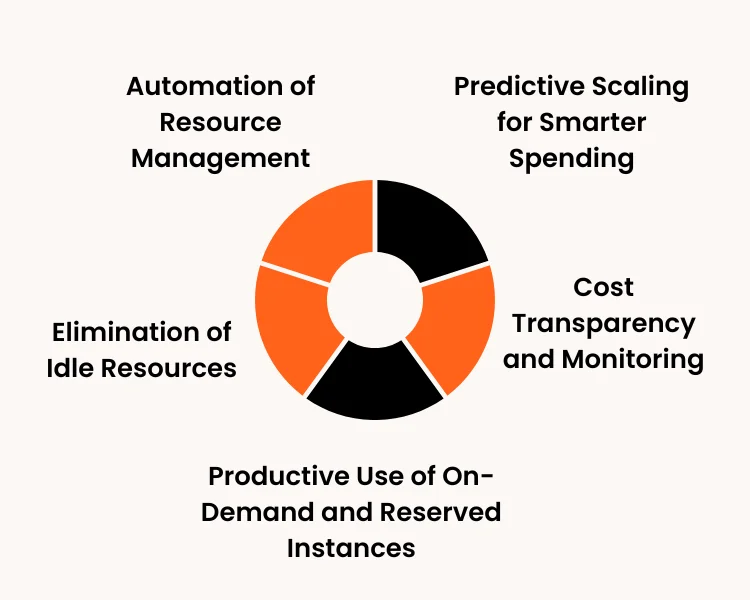

1. Elimination of Idle Resources

Auto Scaling reduces idle resources by automatically terminating instances that are no longer needed once demand falls. This keeps operational costs low while applications remain responsive and available when required.

2. Efficient Use of On-Demand and Reserved Instances

Auto Scaling can be combined with a mix of On-Demand and Reserved Instances for better cost management. By reserving capacity for the stable baseline load and using On-Demand Instances during spikes, companies pay a discounted rate for steady usage and pay extra only when demand requires it.
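The trade-off can be sketched with a little arithmetic. The rates below are hypothetical placeholders, not AWS prices, and `daily_cost` is an illustrative helper rather than part of any AWS tool.

```python
# Hypothetical rates; real prices depend on instance type, region, and term.
ON_DEMAND_RATE = 0.10  # dollars per instance-hour, paid only while running
RESERVED_RATE = 0.06   # effective dollars per instance-hour, paid whether used or not


def daily_cost(instances_per_hour: list[int], reserved_count: int) -> float:
    """Cost of one day when `reserved_count` instances are reserved and
    anything above that number runs On-Demand."""
    reserved_cost = reserved_count * RESERVED_RATE * len(instances_per_hour)
    on_demand_hours = sum(max(0, n - reserved_count) for n in instances_per_hour)
    return round(reserved_cost + on_demand_hours * ON_DEMAND_RATE, 2)


# A made-up day: 2 instances for 16 quiet hours, 6 instances for 8 busy hours.
day = [2] * 16 + [6] * 8

for reserved in (0, 2, 6):
    print(reserved, daily_cost(day, reserved))
```

Under these assumptions, reserving only the 2-instance baseline costs 6.08 for the day, compared with 8.00 for running everything On-Demand and 8.64 for reserving the full peak.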

3. Predictive Scaling for Smarter Spending

Predictive scaling analyzes historical data to forecast future traffic trends. It allocates resources ahead of time, which reduces both the risk of under-provisioning during peaks and unnecessary spending during low-traffic times.
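The idea can be sketched with a deliberately naive forecast: average each time slot across past days, then plan capacity with some headroom. This is only a stand-in for the forecasting that AWS performs, and the function names, the headroom value, and the sample history are assumptions made for the example.

```python
import math
from statistics import mean


def forecast_next_day(history: list[list[float]]) -> list[float]:
    """Average each time slot across past days (a naive seasonal forecast)."""
    return [mean(slot_values) for slot_values in zip(*history)]


def capacity_plan(forecast: list[float], per_instance: float,
                  headroom: float = 0.25) -> list[int]:
    """Instances to have ready for each slot, with headroom above the forecast."""
    return [max(1, math.ceil(load * (1 + headroom) / per_instance))
            for load in forecast]


# Hypothetical load for three past days, four time slots per day.
history = [
    [100, 300, 500, 200],
    [120, 320, 480, 180],
    [80, 280, 520, 220],
]
forecast = forecast_next_day(history)
print(capacity_plan(forecast, per_instance=100))
```

The plan is computed before the traffic arrives, which is the point of predictive scaling: capacity is already in place when the load increases.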

4. Automation of Resource Management

Auto Scaling automates resource management. This minimises human error, lowers administrative overhead, and keeps spending efficient while the required level of performance is maintained.

5. Cost Transparency and Monitoring

Integrated monitoring tools give detailed insight into how costs are distributed across workloads. With that insight, companies can tune their scaling policies more accurately and reduce their cloud costs.

What Metrics Are Commonly Used To Trigger Automated Scaling Actions?

Auto Scaling actions are triggered by predefined metrics that reflect system performance and workload demand. The most common metric is CPU utilization, which shows how much processing power is being consumed. Once CPU usage crosses a configured threshold, extra instances are launched to cope with the load.
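A minimal sketch of a threshold trigger follows. It assumes the threshold must be exceeded for several consecutive samples before scaling out, so that a single spike does not add capacity. The function names, the 70% threshold, and the limits are illustrative choices, not AWS defaults.

```python
def breaches_threshold(samples: list[float], threshold: float, periods: int) -> bool:
    """True when the most recent `periods` samples are all above `threshold`."""
    if len(samples) < periods:
        return False
    return all(value > threshold for value in samples[-periods:])


def desired_capacity(current: int, cpu_samples: list[float],
                     threshold: float = 70.0, periods: int = 3,
                     step: int = 1, max_size: int = 10) -> int:
    """Add `step` instances on a sustained breach, never exceeding `max_size`."""
    if breaches_threshold(cpu_samples, threshold, periods):
        return min(max_size, current + step)
    return current


print(desired_capacity(2, [40.0, 75.0, 80.0, 90.0]))  # sustained breach
print(desired_capacity(2, [75.0, 80.0, 60.0]))        # breach not sustained
```

Requiring a sustained breach is a common design choice because it trades a slightly slower reaction for fewer unnecessary scaling actions.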

Network throughput is another important metric. It shows the total amount of data moving in and out of the instances. Large incoming or outgoing transfers can signal an increase in demand, so scaling actions are taken to keep the application responsive. Other metrics such as memory usage, disk I/O, and request count per target are also commonly monitored to keep performance stable.

Moreover, custom metrics can be defined through Amazon CloudWatch so that scaling behavior reflects the specific application. For instance, metrics such as user session count or queue length can drive scaling policies.
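As an example of a queue-length metric, the sketch below sizes a worker fleet from the backlog each instance can absorb within an acceptable delay. The helper names, limits, and numbers are assumptions for illustration; publishing the metric to CloudWatch is left out.

```python
import math


def acceptable_backlog_per_instance(max_latency_s: float,
                                    seconds_per_message: float) -> float:
    """Messages one instance can work through within the acceptable delay."""
    return max_latency_s / seconds_per_message


def instances_for_queue(queue_length: int, backlog_target: float,
                        min_size: int = 1, max_size: int = 20) -> int:
    """Instances needed so each one holds at most `backlog_target` messages."""
    needed = math.ceil(queue_length / backlog_target)
    return max(min_size, min(max_size, needed))


# Hypothetical numbers: 60 s acceptable delay, 0.5 s to process one message.
target = acceptable_backlog_per_instance(60, 0.5)
print(target, instances_for_queue(1500, target))
```

A backlog-per-instance figure tends to be a better scaling signal than raw queue length, because the same queue length means different things for fleets of different sizes.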

How Does Automated Scaling Help Prevent Performance Bottlenecks?

Performance bottlenecks happen when applications struggle to cope with increased demand, so they slow down and may even go offline. Auto Scaling helps by automatically adding resources before demand exceeds the available capacity. This reduces the risk that a single overloaded server or component causes a failure. Once the load returns to normal, the extra resources are released to restore balance and efficiency.

Besides that, Auto Scaling operates in unison with load balancing, which distributes workloads evenly. This avoids lopsided traffic, where some instances are bogged down with too much work while others are hardly utilized.

Finally, monitoring and feedback loops together allow continuous optimization. Real-time performance data informs and guides scaling decisions, which makes applications more agile and responsive.

What Are The Different Types Of Auto Scaling In AWS?

AWS offers several types of Auto Scaling. Each gives different workloads the control they need and supports a company's operational strategy in a resource-efficient manner.

- Dynamic Scaling – automatically scales resources up or down according to current demand metrics, for instance CPU or network usage, which makes scaling fast and adaptive.

- Scheduled Scaling – sets scaling actions to happen automatically at specified times, which is ideal for predictable loads such as the increase in users during office hours.

- Predictive Scaling – applies machine learning to forecast upcoming resource demand and allocates the required resources ahead of time, so users do not feel the load increase.

- Target Tracking Scaling – keeps a chosen performance metric (for example, 50% CPU utilization) close to a target value by adjusting the number of instances as conditions change.

- Step Scaling – increases or decreases capacity in controlled steps based on metric thresholds, which avoids overreacting to temporary traffic spikes.
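The difference between target tracking and step scaling can be sketched in a few lines. The proportional rule used for target tracking here is an assumption chosen for clarity, not AWS's documented algorithm, and the step table, limits, and function names are hypothetical.

```python
import math


def target_tracking_capacity(current: int, metric: float, target: float,
                             min_size: int = 1, max_size: int = 20) -> int:
    """Resize in proportion to how far the metric is from its target."""
    desired = math.ceil(current * metric / target)
    return max(min_size, min(max_size, desired))


# (lower bound, upper bound, instances to add); bounds are measured as
# percentage points above the alarm threshold. None means no upper bound.
STEPS = [(0, 10, 1), (10, 20, 2), (20, None, 4)]


def step_scaling_change(metric: float, threshold: float) -> int:
    """Instances to add, chosen by how far the metric is above the threshold."""
    breach = metric - threshold
    for lower, upper, change in STEPS:
        if breach >= lower and (upper is None or breach < upper):
            return change
    return 0  # metric is below the threshold, so no change


print(target_tracking_capacity(4, metric=75.0, target=50.0))
print(step_scaling_change(85.0, threshold=70.0))
```

In this sketch, target tracking sizes the fleet from the ratio of the measured value to the target, while step scaling adds a fixed number of instances that grows with the size of the breach.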

Conclusion

Automated scaling has become essential in the digital world as a means of sustaining application performance and operational efficiency. By allocating computing resources intelligently according to need, it improves the user experience and keeps costs under control.

Revolution AI, a cloud-based scaling and optimisation provider, builds and offers automation technologies for organisations. Through careful implementation and management, Revolution AI helps companies set up infrastructure that is resilient, scalable, and high-performing, so that it can grow with their needs.

Frequently Asked Questions

The key objective of auto scaling is to adjust computing resources automatically in line with real-time demand.

Automatic scaling contributes to operational efficiency by providing applications with the right amount of resources at all times.

Auto scaling suits both small startups and fast-growing companies. Smaller companies can keep their costs under control, while growing companies can handle more visitors without worrying about performance or scaling up manually.

Before implementing a scaling policy, it is necessary to study workload patterns, performance thresholds, and resource needs closely. Proper monitoring and testing help confirm that scaling actions are effective, economical, and in tune with the company's goals.

Hemal Sehgal
